COFAR: Commonsense and Factual Reasoning in Image Search

Prajwal Gatti¹, Abhirama Subramanyam Penamakuri¹, Revant Teotia², Anand Mishra¹, Shubhashis Sengupta³, Roshni Ramnani³

¹Indian Institute of Technology Jodhpur, ²Columbia University, ³Accenture Labs

AACL-IJCNLP 2022

[Paper] [Code] [Poster] [Short Talk] [Slides] [arXiv]

Abstract

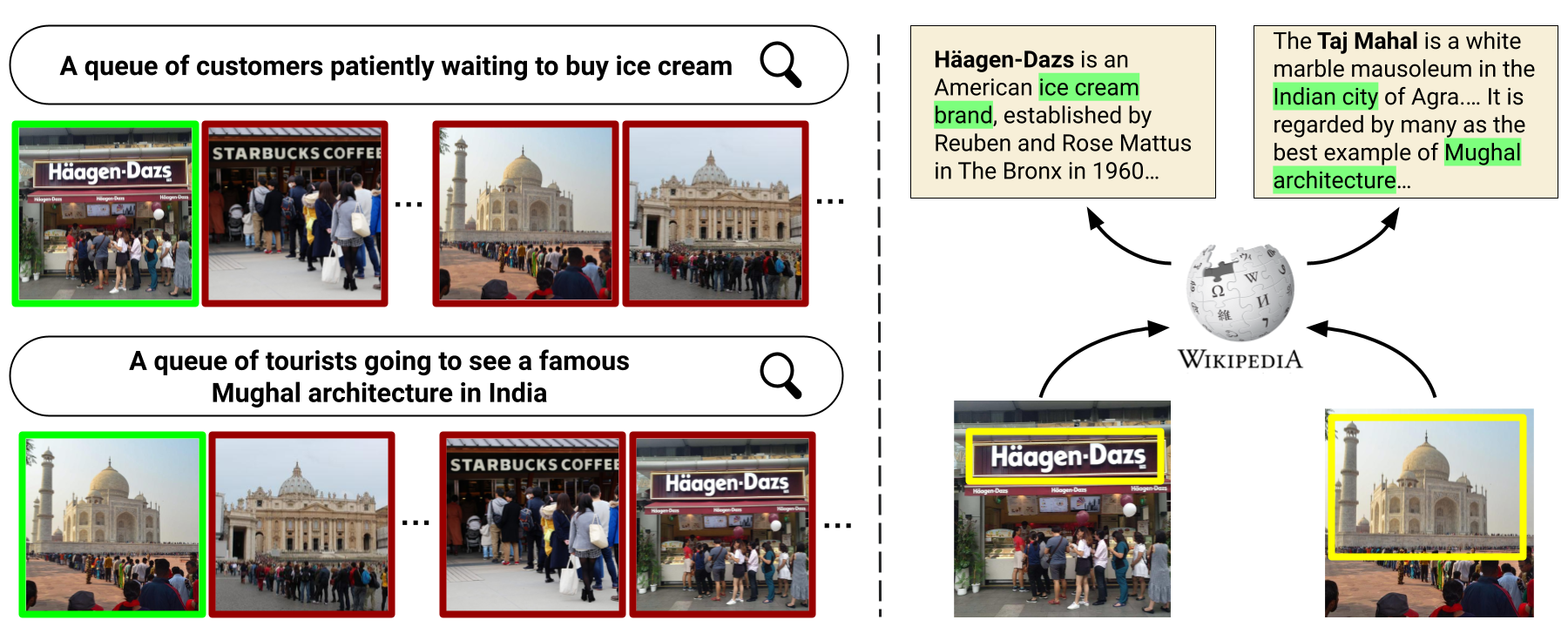

One characteristic that makes humans superior to modern artificially intelligent models is the ability to interpret images beyond what is visually apparent. Consider the following two natural language search queries: (i) “a queue of customers patiently waiting to buy ice cream” and (ii) “a queue of tourists going to see a famous Mughal architecture in India”. Interpreting these queries requires reasoning with (i) commonsense, such as interpreting people as customers or tourists and actions as waiting to buy or going to see; and (ii) facts or world knowledge associated with named visual entities, for example, whether the store in the image sells ice cream or whether the landmark in the image is an example of Mughal architecture located in India. Such reasoning goes beyond visual recognition alone. To enable both commonsense and factual reasoning in image search, we present a unified framework, the Knowledge Retrieval-Augmented Multimodal Transformer (KRAMT), which treats the named visual entities in an image as a gateway to encyclopedic knowledge and uses them together with the natural language query to ground relevant knowledge. KRAMT then integrates the visual content and the grounded knowledge to learn the alignment between images and search queries. This unified framework is used to perform image search requiring commonsense and factual reasoning. We evaluate the retrieval performance of KRAMT and compare it with related approaches on COFAR, a new dataset we introduce.
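The abstract does not name the retrieval metric at this point; a common choice for text-to-image search is Recall@K, the fraction of queries whose ground-truth image appears among the top K ranked results. The sketch below is an illustration of that metric only, not the authors' evaluation script. It assumes exactly one ground-truth image per query, and the image ids and rankings are hypothetical.

```python
from typing import Dict, Sequence


def recall_at_k(rankings: Sequence[Sequence[str]], targets: Sequence[str], k: int) -> float:
    """Fraction of queries whose ground-truth image is in the top-k results.

    rankings[i] is the ranked list of image ids returned for query i (best first);
    targets[i] is the id of the single ground-truth image for query i (assumption).
    """
    if len(rankings) != len(targets):
        raise ValueError("need exactly one ranking per query")
    if not targets:
        return 0.0
    hits = sum(1 for ranked, gold in zip(rankings, targets) if gold in ranked[:k])
    return hits / len(targets)


def recall_report(
    rankings: Sequence[Sequence[str]],
    targets: Sequence[str],
    ks: Sequence[int] = (1, 5, 10),
) -> Dict[int, float]:
    """Recall@K for several cut-offs at once."""
    return {k: recall_at_k(rankings, targets, k) for k in ks}


if __name__ == "__main__":
    # Hypothetical rankings for three queries over a tiny image gallery.
    rankings = [
        ["img_3", "img_1", "img_7"],
        ["img_2", "img_5", "img_9"],
        ["img_4", "img_8", "img_6"],
    ]
    targets = ["img_3", "img_9", "img_0"]
    print(recall_report(rankings, targets, ks=(1, 3)))
```

With these sample rankings, the first query hits at rank 1, the second at rank 3, and the third misses entirely, so Recall@1 is 1/3 and Recall@3 is 2/3.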

Highlights

- Introduced a novel task of Image Search requiring Commonsense and Factual Reasoning associated with visual named entities.

- Introduced a novel dataset, COFAR, containing 25K+ images and 40K+ search queries.

- Proposed KRAMT (Knowledge Retrieval-Augmented Multimodal Transformer), which performs named visual entity linking followed by retrieval of related factual knowledge to find the matching image in a database of images.
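The pipeline in the highlights (link the named visual entities, retrieve their facts, then score each image against the query) can be illustrated with a toy sketch. Everything here is a hypothetical assumption for illustration: the knowledge base `KB`, the `GALLERY` of captions with pre-linked entities (captions stand in for visual content), and the bag-of-words cosine similarity, which is a plain stand-in for KRAMT's learned multimodal transformer and is not the authors' method.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical toy knowledge base: entity name -> encyclopedic fact text.
KB: Dict[str, str] = {
    "Taj Mahal": "Mughal architecture mausoleum in Agra India",
    "Baskin Robbins": "American chain of ice cream parlours",
}

# Hypothetical gallery: (image id, caption standing in for visual content,
# named entities assumed to be already linked by an entity linker).
GALLERY: List[Tuple[str, str, List[str]]] = [
    ("img_1", "a queue of people outside a white marble building", ["Taj Mahal"]),
    ("img_2", "a queue of people outside a store", ["Baskin Robbins"]),
]


def tokens(text: str) -> Counter:
    """Lower-cased bag of words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bags of words."""
    dot = sum(count * b[t] for t, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def score(query: str, caption: str, entities: List[str], use_kb: bool = True) -> float:
    document = tokens(caption)
    if use_kb:
        # Use each linked entity as a key into the KB and append its facts.
        facts = " ".join(KB.get(entity, "") for entity in entities)
        document = document + tokens(facts)
    # Stand-in for learned query-image alignment: plain cosine similarity.
    return cosine(tokens(query), document)


def search(query: str, use_kb: bool = True) -> List[str]:
    """Return gallery image ids ranked from best to worst match."""
    ranked = sorted(
        GALLERY,
        key=lambda item: score(query, item[1], item[2], use_kb),
        reverse=True,
    )
    return [image_id for image_id, _, _ in ranked]


if __name__ == "__main__":
    print(search("a queue of tourists going to see a famous Mughal architecture in India"))
```

Calling `search("a queue of tourists going to see a famous Mughal architecture in India")` ranks `img_1` first; with `use_kb=False` the same query ranks `img_2` first, because the caption of `img_1` alone carries no cue about Mughal architecture or India. This mirrors the point that such queries need knowledge beyond what is visually apparent.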

Dataset Downloads

Explore the COFAR dataset: [Gallery]

- Dataset Images [Image URLs] (585 KB)
- Train Data [Image-Caption pairs] (313 KB)
- Test Data
  - COFAR-unified (285 KB)
  - COFAR-brand (265 KB)
  - COFAR-celeb (71 KB)
  - COFAR-landmark (20 KB)
- Knowledge Bases
  - KB-brand (274 KB)
  - KB-celeb (553 KB)
  - KB-landmark (431 KB)
- Image Feature Extraction [Script] README

BibTeX

Please cite our work as follows:

@inproceedings{cofar2022,
  author = "Gatti, Prajwal and
            Penamakuri, Abhirama Subramanyam and
            Teotia, Revant and
            Mishra, Anand and
            Sengupta, Shubhashis and
            Ramnani, Roshni",
  title = "COFAR: Commonsense and Factual Reasoning in Image Search",
  booktitle = "AACL-IJCNLP",
  year = "2022",
}

Acknowledgements

Abhirama S. Penamakuri is supported by the Prime Minister Research Fellowship (PMRF), Ministry of Education, Government of India. We thank Accenture Labs for supporting this work.